This blog will describe how to setup a connection from your Home Assistant local instance to Microsoft Fabric. With your data in Fabric, you will be able to analyse and make long term dashboards, as well as ensure that your data is stored safe and securely in the cloud.

PREREQUISITEs:

- Home Assistant running – Your Home assistant needs to deployed and running

- A Fabric Tenant – https://learn.microsoft.com/en-us/fabric/get-started/fabric-trial

Setup Fabric EventHouse

In fabric we need to have an Eventhouse. The Fabric Eventhouse is a container for all your future KQL databases.

Go To app.powerbi.com, and create a workspace if you dont already have one. (Workspaces -> + New workspace). Name you workspace and give it a description and an image.

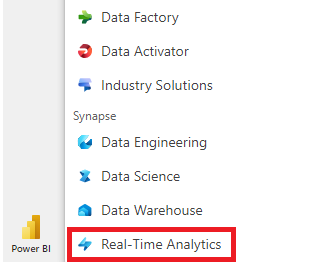

Select the Real Time Analytics experience

Go to your workspace and create a Eventhouse

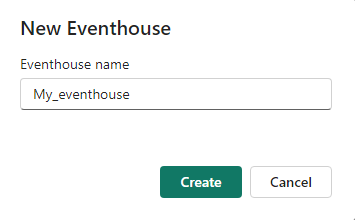

Name your Eventhouse to you liking

Setting up Eventstream

Next step is to setup the EventStram, this will create the endpoint and any inbound transformations of your data before it will write the data to the Eventhouse

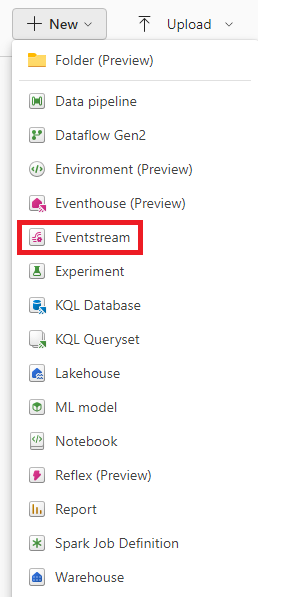

Go back to the workspace still in the Real Time Ananytics experience and select + new -> Eventstream

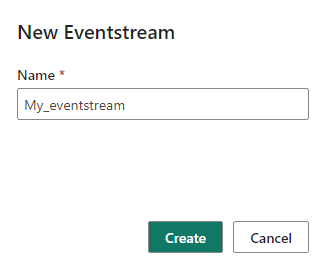

name it, and click create

Now your Eventstream is created, and almost ready to rescieve data from Home Assistant

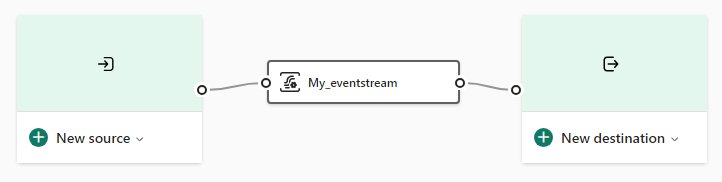

Click the + New source and select “Custom App”, Name “this “Home_Assistant” and click “Add”. This will create an endpoint that you now can send data to using different protocols.

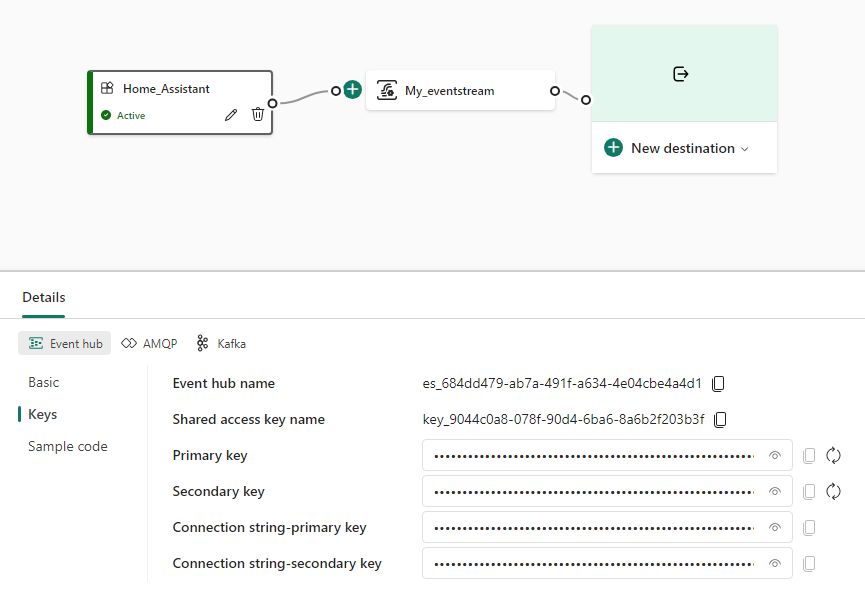

The Endpoint is now ready. Click on the Home_assistant source -> Details -> Eventhub -> Keys

Configure Home assistant

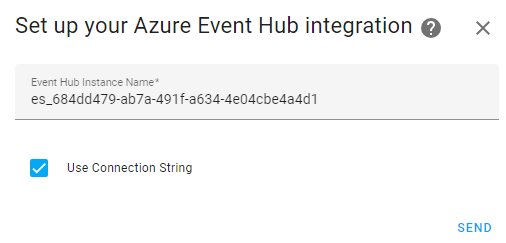

In Home Assistant, we need to setup a Azure EventHub connection, go to Settings -> Devices and Services. Click the “Add Integration” button and find Microsoft -> Azure Event hub

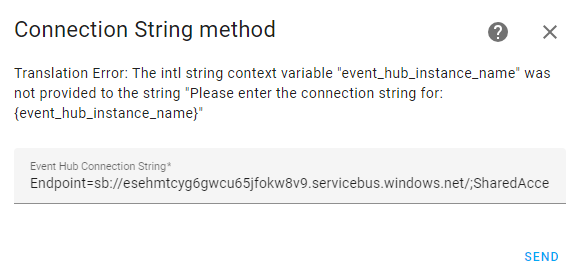

In the popup, enter the Event Hub Name from Fabric in the Event Hub Instance Name field and check the “Use Connection String” checkbox, click SEND

Now copy in the Connection string-primary key (or secondary key) into the Event Hub Connection String field

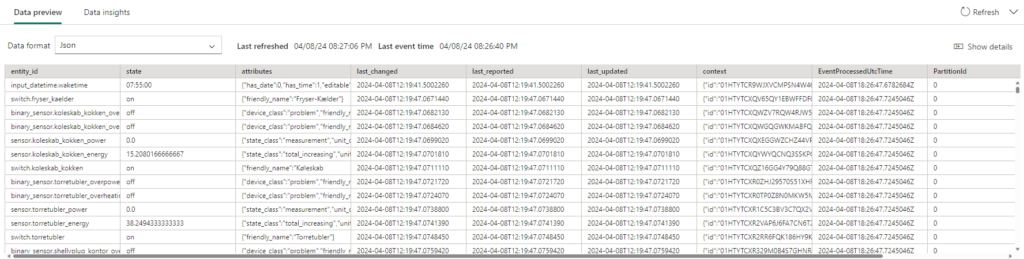

Now the connection between Home Assistant and Fabric has been established and date is streaming into fabric. In the Data preview pane in the Eventstream in fabric, data should be showing up

Creating the KQL database

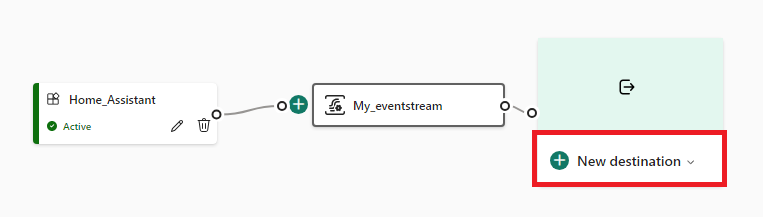

In the Eventstream, click new destination and select “KQL Database”

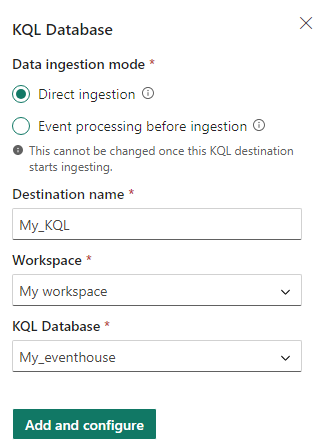

This wil open a pane to the right, defining the connection to the KQL database. Click the “Direct ingestion” button, and fill out the fields

If you need to change the schema, FX remove/rename columns, change datatypes, select the Event Processing before ingestion. This will give the option to manipulate the data before its written to the database. This is not covered in this post

And finally click “Add and Configure”. This will start a wizard for setting up the KQL database

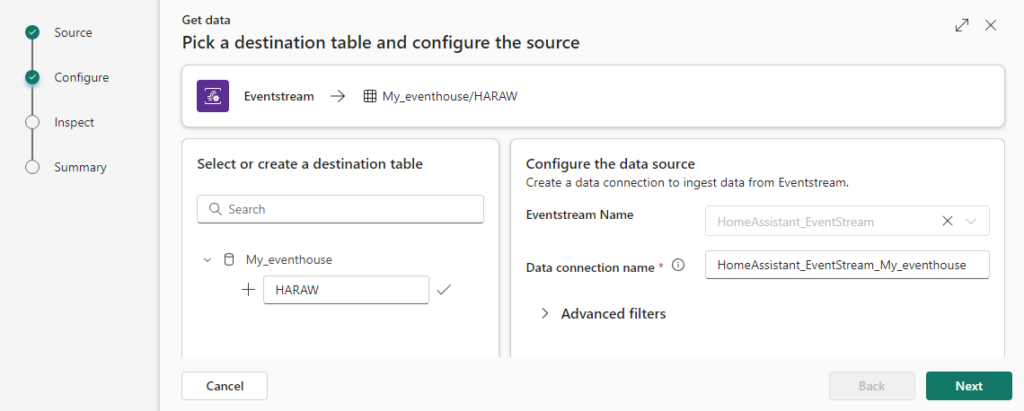

Click + New Table and name the table ex. HARAW and click NEXT

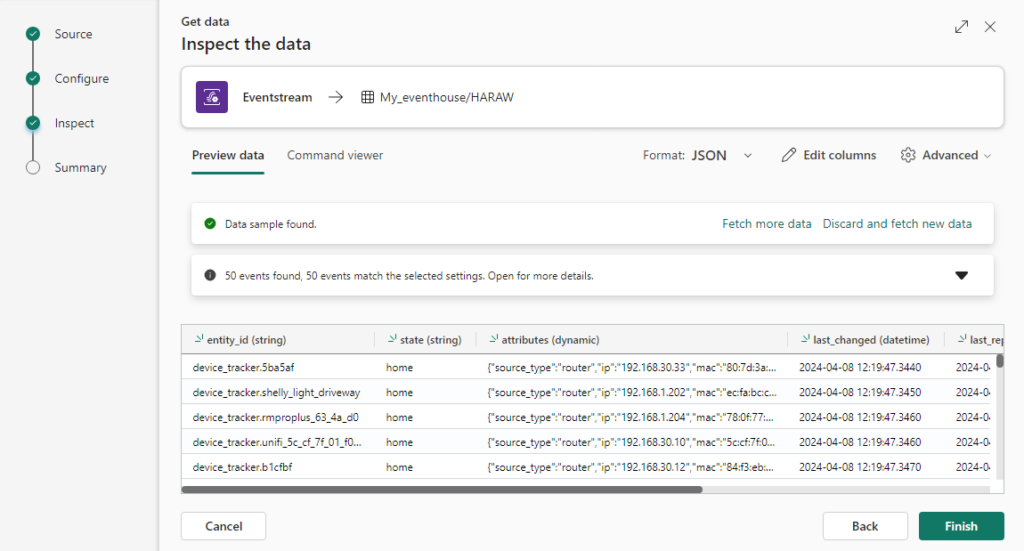

Now Fabric will check and preview the data for you to inspect

If everything looks good click “Finish”. Now the resources will be created and connected. when finished, click Close

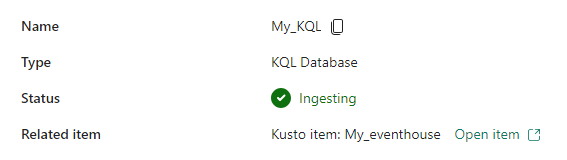

After a few seconds, the status should change to “Ingesting” with a green checkmark, and now data is steaming into you KQL Cluster.

Working with you Home Assistant data in Eventhouse

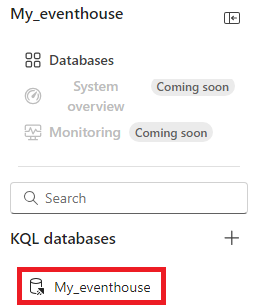

Navigate back to you Eventhouse, were you will find you new KQL Database and some metrics about you Eventhouse. Click on your KQL Database

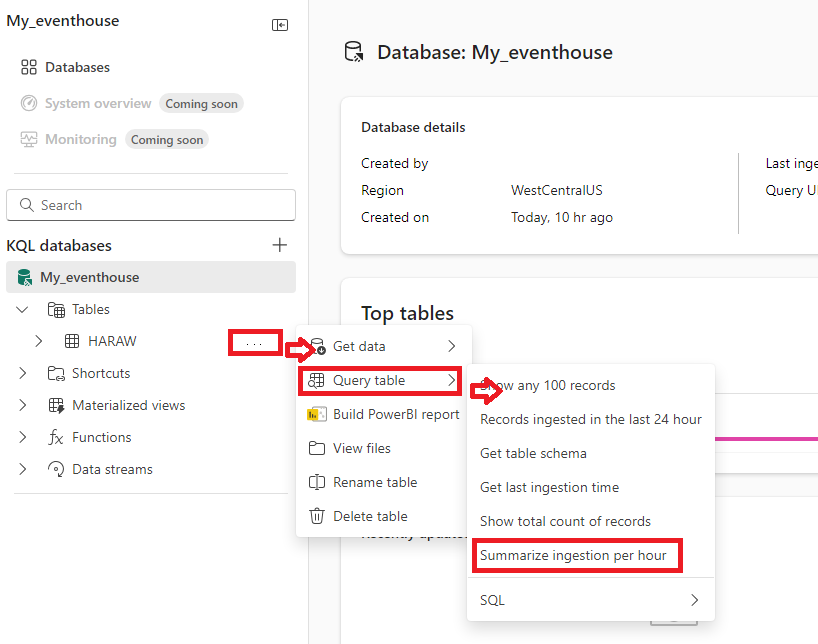

Click on the 3 dots next to the new table -> Query table -> Sumarize ingestion per hour

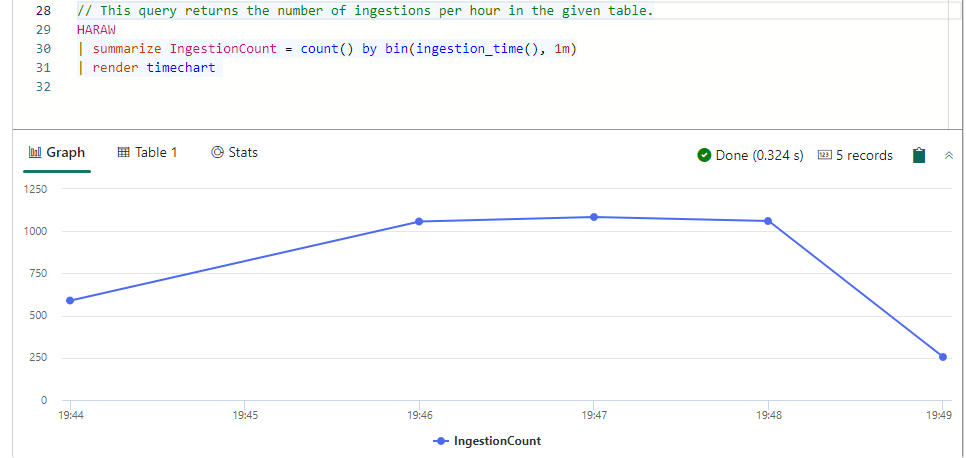

And change the query as follows:

HARAW

| summarize IngestionCount = count() by bin(ingestion_time(), 1m)

| render timechart Remeber to change the timing to 1m (1 minute) and the name of the table (HARAW) to your table name

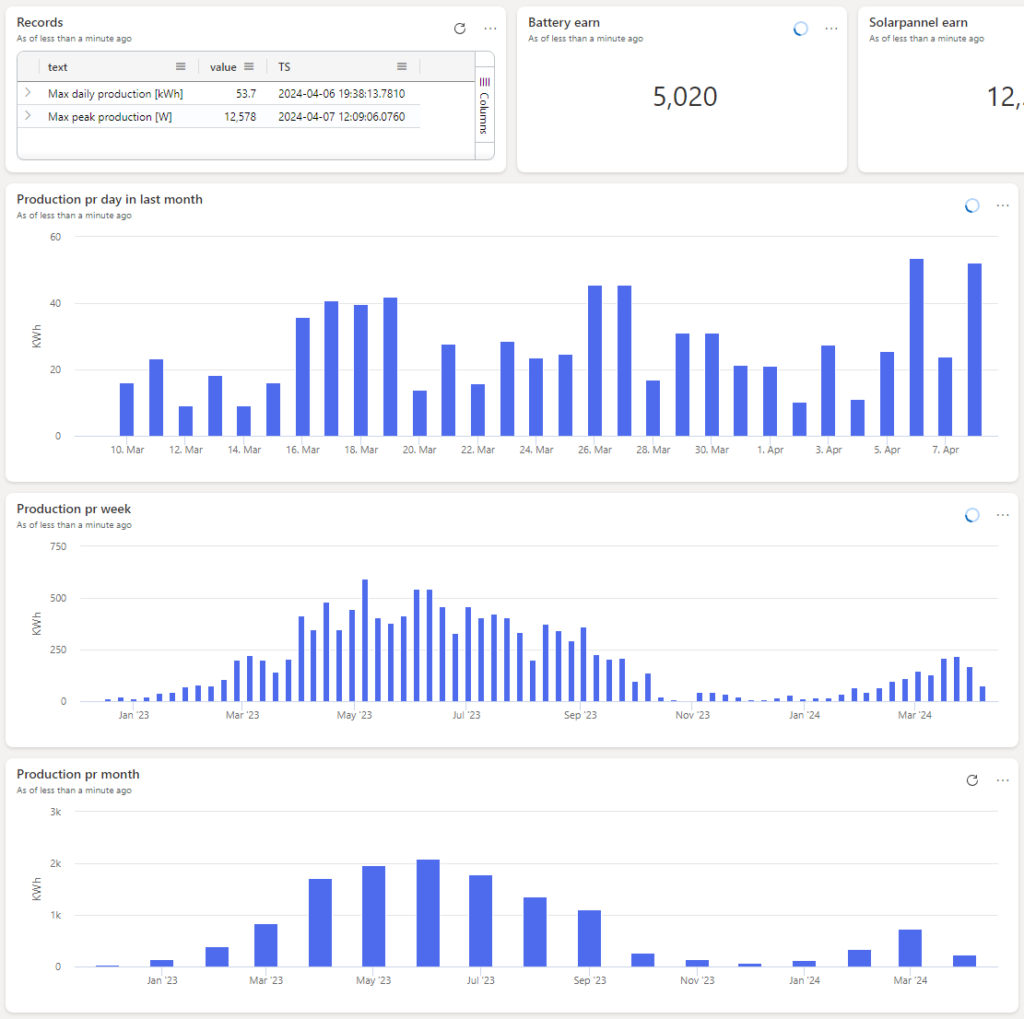

Now data is streaming in, and from here its a world of posibilities with in the KQL world for all kind of analytcs and building dashboads. even moving forward with creating functions, updata policies and materialized views. This will be explorer in further posts. but below is a few examples of dashboards, first one is a realtime dashboard from within KQL

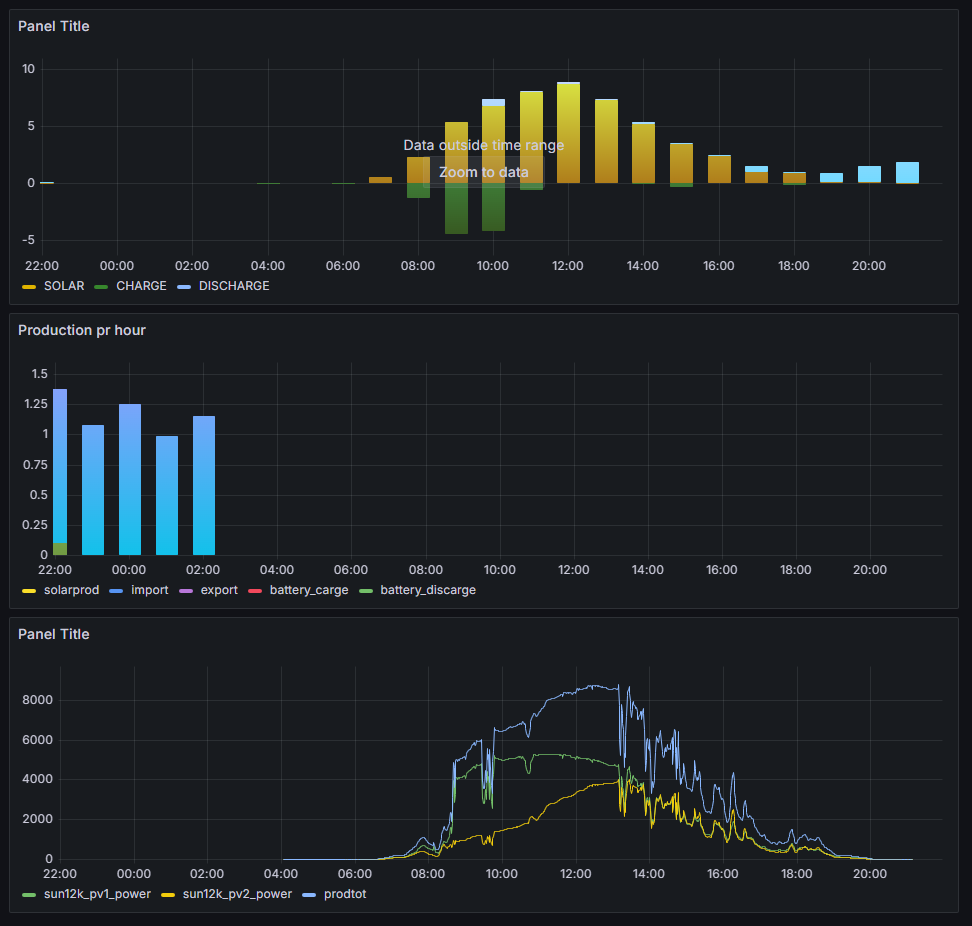

Second is a dashboard form connecting Grafana addon in Home assistant to KQL

CONCLUSION

In this post we managed to install all needed components in Microsoft Fabric and in Home Assistant, to configure these components so data streams from Home Assistant and to Real Time Analytics in Fabric.

Learn more

the best way to learn to use KQL is by start using it, here is a few links for further learning: